In the rapidly evolving world of open-source artificial intelligence, new combinations of models and frameworks appear almost weekly. One of the more intriguing pairings in recent months is AgenticSeek powered by DeepSeek R1 30B. Positioned as a reasoning-focused, agent-capable system built on a large but still locally deployable model, it promises a blend of autonomy, analytical ability, and practical performance. But how good is it really in practice?

TL;DR: AgenticSeek with DeepSeek R1 30B delivers impressive reasoning performance for an open 30B-class model, especially in structured problem-solving, coding, and multi-step tasks. It shines when configured properly and paired with good tools, but it demands strong hardware and careful setup. For advanced users and developers, it offers tremendous value; for beginners, it may feel complex. Overall, it is one of the more capable mid-sized open setups currently available.

To understand whether this combination is worth the hype, it is necessary to examine its architecture, performance, real-world usability, strengths, weaknesses, and how it compares to alternatives.

What Is AgenticSeek?

AgenticSeek is not a foundation model itself. Rather, it is an agent framework designed to add structured reasoning, tool usage, workflow planning, and iterative problem solving on top of a base large language model (LLM). In essence, it transforms a static text generator into a more autonomous system capable of:

- Multi-step reasoning

- Tool invocation (like code interpreters or search)

- Memory handling

- Self-correction loops

- Task decomposition

When coupled with DeepSeek R1 30B, the framework runs on top of a strong reasoning-oriented model from a family that DeepSeek positions as competitive with higher-end proprietary systems in logical and mathematical domains.
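Tool invocation is the least self-explanatory item in that list. The general idea is that the model emits a structured request and the framework looks up and runs the matching function. The following is a minimal sketch under stated assumptions, not AgenticSeek's actual protocol: the JSON call format, the `TOOLS` registry, and the `add` and `word_count` tools are all hypothetical.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry: maps a tool name to a plain Python function.
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function under the given tool name."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("add")
def add(a: float, b: float) -> str:
    return str(a + b)


@tool("word_count")
def word_count(text: str) -> str:
    return str(len(text.split()))


def dispatch(model_output: str) -> str:
    """Run the tool requested by the model and return its result as text.

    Assumes a hypothetical format: {"tool": "<name>", "args": {...}}.
    Failures are returned as text so the agent loop can show them to the model.
    """
    try:
        call = json.loads(model_output)
        fn = TOOLS[call["tool"]]
        return fn(**call.get("args", {}))
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return f"tool error: {exc!r}"


print(dispatch('{"tool": "add", "args": {"a": 2, "b": 3}}'))  # 5
print(dispatch('{"tool": "search", "args": {}}'))  # tool error: KeyError('search')
```

Returning errors as text rather than raising them is a deliberate choice: the agent loop can pass the error back to the model, which then gets a chance to correct its own request.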

Understanding DeepSeek R1 30B

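DeepSeek R1 30B is a reasoning model of roughly 30 billion parameters designed with enhanced chain-of-thought capabilities. Strictly speaking, the full DeepSeek-R1 is a much larger mixture-of-experts model; the ~30B-class R1 checkpoints that fit on local hardware are distilled variants, such as DeepSeek-R1-Distill-Qwen-32B. The model focuses heavily on structured logic, math, coding, and analysis. Unlike some smaller instruct-tuned models that prioritize conversational fluency over depth, R1 30B leans into deliberate reasoning and structured solutions.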

Key characteristics include:

- Strong logical inference across multi-step problems

- Competitive coding output for a 30B model

- High reasoning transparency through step-by-step outputs

- Better hallucination control compared to many similarly sized models

Thirty billion parameters place it in a sweet spot: significantly more capable than 7B or 13B models, but still potentially runnable on high-end consumer GPUs or optimized inference environments.
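The step-by-step transparency mentioned above is visible in the raw output: R1-family models write their reasoning between `<think>` and `</think>` tags before giving a final answer. A small helper like the hypothetical `split_reasoning` below can separate the two, for example to log the trace while showing users only the answer. It is a sketch that assumes this tag convention and tolerates a missing opening tag, since, depending on the chat template, `<think>` may not appear in the generated text.

```python
from typing import Tuple

OPEN_TAG, CLOSE_TAG = "<think>", "</think>"


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Split an R1-style completion into (reasoning_trace, final_answer).

    Everything before the last </think> is treated as reasoning; the opening
    <think> tag is optional because some chat templates put it in the prompt.
    """
    head, sep, tail = raw.rpartition(CLOSE_TAG)
    if not sep:  # no reasoning block at all
        return "", raw.strip()
    reasoning = head.strip()
    if reasoning.startswith(OPEN_TAG):
        reasoning = reasoning[len(OPEN_TAG):]
    return reasoning.strip(), tail.strip()


raw = "<think>\n10 * 5 = 50, plus 1 is 51.\n</think>\nThe answer is 51."
reasoning, answer = split_reasoning(raw)
print(reasoning)  # 10 * 5 = 50, plus 1 is 51.
print(answer)  # The answer is 51.
```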

Performance in Reasoning Tasks

The real question is whether AgenticSeek meaningfully enhances DeepSeek R1 30B or simply adds overhead. For complex, multi-step work, the answer usually leans toward enhancement.

When solving:

- Complex math puzzles

- Algorithmic challenges

- Multi-step decision workflows

- Research-style breakdown tasks

The agent structure encourages the model to:

- Break problems into smaller pieces.

- Check intermediate steps.

- Re-evaluate outputs before finalizing answers.

This greatly improves reliability. Without an agent loop, the model can still reason well, but it may jump prematurely to conclusions. With AgenticSeek, iterative refinement reduces such errors.

However, the trade-off is speed. The agent process adds latency, because every extra model call and reasoning loop takes time on top of the already lengthy reasoning traces R1-style models produce.
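The propose, check, and revise cycle described above can be sketched in a few lines. This is a hypothetical illustration rather than AgenticSeek's implementation: `refine`, `toy_model`, and `sum_checker` are invented names, and `toy_model` is a deterministic stub standing in for calls to DeepSeek R1 30B. In a real agent, the checker might be a unit test, a calculator tool, or a second model call that critiques the answer.

```python
from typing import Callable, Optional, Tuple

# propose(task, feedback) -> candidate answer; in practice a model call.
Proposer = Callable[[str, Optional[str]], str]
# check(answer) -> (accepted, feedback); e.g. a test, a tool, or a critic model.
Checker = Callable[[str], Tuple[bool, str]]


def refine(task: str, propose: Proposer, check: Checker,
           max_rounds: int = 3) -> Tuple[str, bool, int]:
    """Propose, check, and revise until accepted or the round budget runs out.

    Returns (last_answer, accepted, rounds_used).
    """
    feedback: Optional[str] = None
    answer = ""
    for rounds in range(1, max_rounds + 1):
        answer = propose(task, feedback)
        accepted, feedback = check(answer)
        if accepted:
            return answer, True, rounds
    return answer, False, max_rounds


def toy_model(task: str, feedback: Optional[str]) -> str:
    # Stub: jumps to a wrong answer first, then "uses" the feedback.
    return "50" if feedback is None else "55"


def sum_checker(answer: str) -> Tuple[bool, str]:
    expected = sum(range(1, 11))  # 1 + 2 + ... + 10 = 55
    if answer.strip() == str(expected):
        return True, "correct"
    return False, f"{answer} is wrong; add the numbers step by step."


print(refine("Sum the integers 1 to 10", toy_model, sum_checker))  # ('55', True, 2)
```

Each extra round is another full model call, which is where the added latency comes from; `max_rounds` caps that cost.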

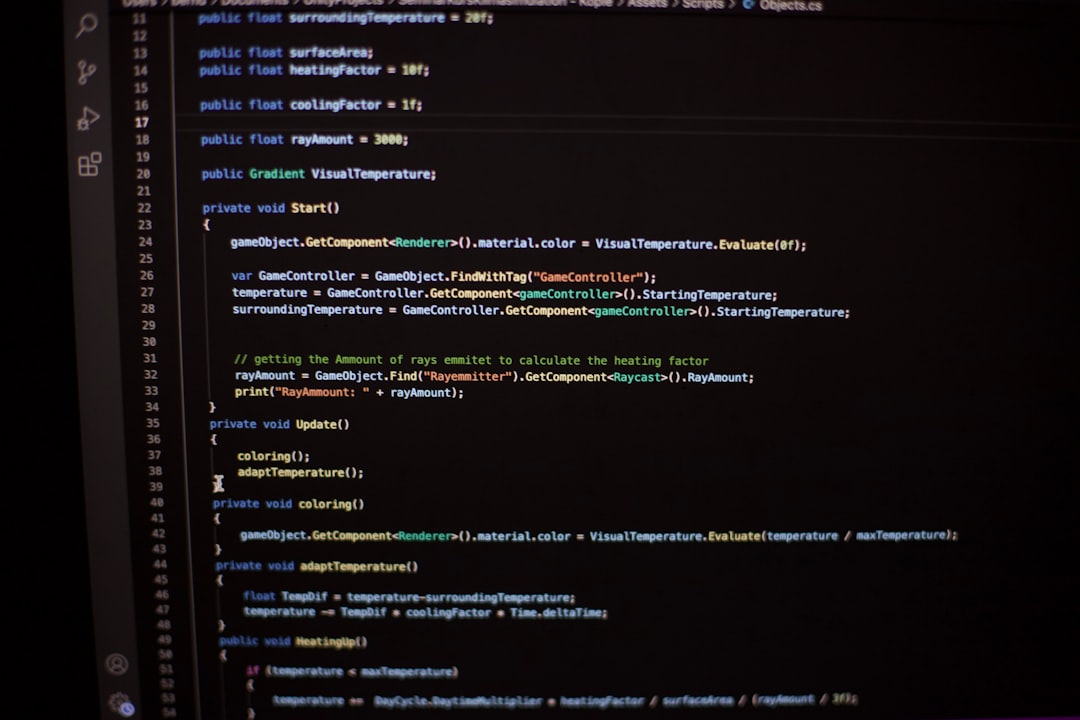

Coding Capabilities

Developers often judge a model by its coding performance. Here, AgenticSeek with DeepSeek R1 30B performs impressively.

Strengths include:

- Clean structural output for functions and modules

- Step-by-step debugging reasoning

- Effective error explanation

When integrated with code execution tools, AgenticSeek can:

- Generate code

- Test the code

- Diagnose failures

- Refactor automatically

This iterative loop dramatically boosts reliability compared to one-shot code generation. For developers working locally or in private infrastructures, this combination competes surprisingly well with select closed-source models.
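The generate, test, diagnose, and refactor loop can be sketched as follows, assuming the generated code is Python and that a failing run signals itself through a non-zero exit code. `generate_test_fix`, `run_snippet`, and `toy_coder` are hypothetical names, and `toy_coder` is a stub playing the role of the model: its first draft crashes on an empty list, and its second draft fixes the bug after receiving the traceback. Note that a subprocess is not a sandbox; model-generated code should run in an isolated environment such as a container.

```python
import subprocess
import sys
from typing import Callable, Optional, Tuple


def run_snippet(code: str, timeout: float = 10.0) -> Tuple[bool, str]:
    """Run Python source in a fresh interpreter; return (succeeded, stderr)."""
    try:
        result = subprocess.run([sys.executable, "-c", code],
                                capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout} seconds"
    return result.returncode == 0, result.stderr


def generate_test_fix(write_code: Callable[[Optional[str]], str],
                      max_attempts: int = 3) -> Tuple[str, bool, int]:
    """Generate code, test it, and feed failures back until it passes."""
    error: Optional[str] = None
    code = ""
    for attempt in range(1, max_attempts + 1):
        code = write_code(error)       # generate, or refactor on retries
        ok, error = run_snippet(code)  # test
        if ok:
            return code, True, attempt
        # diagnose: the traceback in `error` goes back to the model next round
    return code, False, max_attempts


def toy_coder(error: Optional[str]) -> str:
    # Stub model: the first draft divides by zero on an empty list.
    if error is None:
        return "def mean(xs): return sum(xs) / len(xs)\nassert mean([]) == 0"
    return "def mean(xs): return sum(xs) / len(xs) if xs else 0\nassert mean([]) == 0"


code, ok, attempts = generate_test_fix(toy_coder)
print(ok, attempts)  # True 2
```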

Hardware Requirements and Practical Limitations

A 30-billion-parameter model is not trivial to run.

Running DeepSeek R1 30B effectively usually requires:

- Multiple GPUs, or

- A very high-VRAM enterprise GPU, or

- Heavy quantization (with some quality trade-offs)

Even with quantization, users may need:

- 24GB+ VRAM for comfortable deployment

- Optimized inference engines

- Careful configuration of context window sizes

AgenticSeek further increases memory usage due to tool orchestration and intermediate states. Therefore, this pairing is not beginner-friendly from a hardware standpoint.

This is one of its clearest weaknesses: accessibility. Smaller models are easier to deploy. Cloud APIs from major AI providers are even easier. In contrast, this setup demands technical proficiency.
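The hardware figures above follow from simple arithmetic. Weight memory is roughly the parameter count times bits per parameter divided by 8, and the key-value (KV) cache grows linearly with context length, which is why context window size needs careful configuration. The sketch below computes both, assuming 2 bytes per cached value. The layer count, KV-head count, and head dimension are illustrative assumptions, not the published configuration of any specific DeepSeek checkpoint, and real quantization formats usually store somewhat more than the nominal bits per weight.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


def kv_cache_gb(layers: int, kv_heads: int, head_dim: int,
                context_tokens: int, bytes_per_value: int = 2) -> float:
    """KV cache: one key and one value vector per layer, KV head, and token."""
    return 2 * layers * kv_heads * head_dim * context_tokens * bytes_per_value / 1e9


N_PARAMS = 30e9  # 30 billion parameters
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(N_PARAMS, bits):.1f} GB")

# Illustrative architecture values (assumed, not taken from a real config).
LAYERS, KV_HEADS, HEAD_DIM = 64, 8, 128
for ctx in (8_192, 32_768):
    print(f"KV cache at {ctx} tokens: {kv_cache_gb(LAYERS, KV_HEADS, HEAD_DIM, ctx):.1f} GB")
```

Under these assumptions, 4-bit weights alone take about 15 GB, and a 32,768-token context adds roughly 8.6 GB of KV cache before runtime overhead, which is consistent with 24GB+ being the comfortable tier for quantized deployment.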

Use Cases Where It Excels

Despite its technical complexity, AgenticSeek with DeepSeek R1 30B shines in specific environments.

1. Private Enterprise AI

Organizations that require on-premise deployment due to compliance or security regulations benefit enormously, since prompts, documents, and model outputs can stay entirely within their own infrastructure.

2. Research and Experimentation

Researchers who need full control over reasoning traces and model internals gain transparency that proprietary APIs often restrict.

3. Advanced Automation Agents

Companies building semi-autonomous AI agents for internal workflows can customize behavior extensively.

4. Coding Copilot Alternatives

For developers who prefer local or self-hosted solutions, this pairing offers impressive independence.

Where It Falls Short

No system is perfect. The main drawbacks include:

- Setup complexity

- High computational requirements

- Slower responses in agent mode

- Less conversational polish compared to some heavily RLHF-tuned models

While reasoning is strong, conversational fluidity can feel slightly mechanical. For users looking for empathetic dialogue or polished creative writing, other models may feel smoother.

Additionally, agent loops must be carefully configured. Poorly tuned recursion limits or tool-usage strategies can waste time and compute, for example by letting the agent retry the same failing step over and over.
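Two simple safeguards address this: a hard step budget and a guard that stops the agent when it keeps issuing the same action. The sketch below is a hypothetical illustration; `run_agent`, `max_steps`, `max_repeats`, and the `(tool, argument)` action format are invented for this example and are not AgenticSeek configuration options.

```python
from typing import Callable, Dict, List, Optional, Tuple

Action = Tuple[str, str]  # hypothetical (tool_name, argument) pair


def run_agent(next_action: Callable[[List[str]], Optional[Action]],
              execute: Callable[[Action], str],
              max_steps: int = 8,
              max_repeats: int = 2) -> Tuple[str, List[str]]:
    """Agent loop with a hard step budget and a repeated-action guard.

    `next_action` returns None when the agent considers the task finished.
    Returns (status, observations).
    """
    observations: List[str] = []
    counts: Dict[Action, int] = {}
    for _ in range(max_steps):
        action = next_action(observations)
        if action is None:
            return "done", observations
        counts[action] = counts.get(action, 0) + 1
        if counts[action] > max_repeats:
            return "stopped: repeated action", observations
        observations.append(execute(action))
    return "stopped: step budget exhausted", observations


def stuck(observations: List[str]) -> Action:
    # A stuck agent that keeps issuing the same failing search.
    return ("search", "same query")


def fail(action: Action) -> str:
    return "no results"


print(run_agent(stuck, fail))  # ('stopped: repeated action', ['no results', 'no results'])
```

Counting exact repeats is a crude heuristic; it catches obvious loops cheaply, while the step budget bounds the worst case regardless of what the model does.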

Comparison With Other Models

Compared with smaller open models (7B–13B), DeepSeek R1 30B clearly dominates in reasoning depth.

Compared with much larger proprietary models:

- It may lose in raw breadth and conversational nuance.

- It remains surprisingly competitive in structured logic tasks.

Its greatest advantage lies in the balance of:

- Open accessibility

- High reasoning performance

- Agentic extensibility

This makes it particularly attractive to serious AI builders rather than casual users.

Is It Worth It?

So, is AgenticSeek with DeepSeek R1 30B any good?

The answer depends on perspective.

For AI enthusiasts and developers: It is very good. It offers meaningful reasoning, customization, and autonomy capabilities without complete reliance on closed APIs.

For businesses with infrastructure: It can be an excellent private AI backbone.

For casual users: It may be overkill and technically intimidating.

In short, it is not the easiest system — but it is among the more powerful mid-tier open solutions available today.

FAQ

1. What makes DeepSeek R1 30B different from other 30B models?

DeepSeek R1 30B is heavily optimized for logical reasoning and structured thinking. It emphasizes multi-step problem solving more than many general conversational models in the same size class.

2. Does AgenticSeek improve model accuracy?

Generally, yes, especially for complex tasks. By introducing iterative reasoning loops, tool usage, and task decomposition, it reduces premature or shallow answers.

3. Can it run on a single GPU?

It can, but typically only with quantization and a high-VRAM GPU (24GB+). Performance may degrade depending on optimization choices.

4. Is it better than closed-source AI like GPT models?

In conversational fluidity and scale, it may fall short. In structured reasoning and local control, it can compete surprisingly well.

5. Is it suitable for beginners?

Not ideal. The setup and hardware demands call for technical knowledge. Beginners may prefer managed AI platforms with simpler interfaces.

6. What are the best use cases?

Private enterprise deployment, research experimentation, coding automation, and custom AI agent development are among its strongest applications.

7. Does it hallucinate?

All language models can hallucinate. However, DeepSeek R1 30B combined with agent-based self-checking tends to reduce error rates in logical domains.

Ultimately, AgenticSeek with DeepSeek R1 30B stands as a powerful tool for those willing to harness it properly — demanding, complex, but capable of delivering remarkably strong results in the right hands.